Printable Base89 LUT Encoding

Mon 20 October 2025

Michael Labbe

#code

Need

When adding state logic or semantic markup to text strings, there are two options: in-band formatting or out-of-band formatting.

In-band formatting indicates changes in a string. For instance:

    Hello <span name="first_name">Fred</span>

Out-of-band markup involves describing a range of characters as having properties as part of a separate data structure.

    const char *text = "Hello Fred";
    Span spans[] = {
        {6, 10, "first_name"}  // chars 6-9 form "Fred"
    };

Out-of-band markup is ultimately more efficient, but in-band markup has the advantage of being incredibly handy to author in an ad-hoc way, such as when putting together a string literal in a program.
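For comparison with what follows, the out-of-band example can be made self-contained. This is only a sketch: the `Span` layout (half-open range plus a name) and the helper `span_text` are assumptions made for illustration, since the snippet above does not define them.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* Assumed layout: a half-open character range [start, end) with a label. */
typedef struct {
    size_t start;      /* index of the first character in the range */
    size_t end;        /* one past the last character in the range */
    const char *name;  /* property attached to the range */
} Span;

/* Hypothetical helper: copy the text covered by a span into out,
   NUL-terminated and truncated to fit. Returns the bytes copied. */
static size_t span_text(const char *text, const Span *span,
                        char *out, size_t out_size)
{
    assert(out_size > 0);
    assert(span->start <= span->end && span->end <= strlen(text));

    size_t n = span->end - span->start;
    if (n > out_size - 1)
        n = out_size - 1;
    memcpy(out, text + span->start, n);
    out[n] = '\0';
    return n;
}
```

Calling `span_text` with the text "Hello Fred" and the span {6, 10, "first_name"} copies "Fred" into the output buffer. The cost of this approach is visible even here: the offsets have to be kept in sync with the text by hand.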

Unfortunately, all of the in-band markup languages are intended for a specific output environment, such as ANSI escape sequences or HTML. Their definitions are large, yet limited to the uses their designers intended.

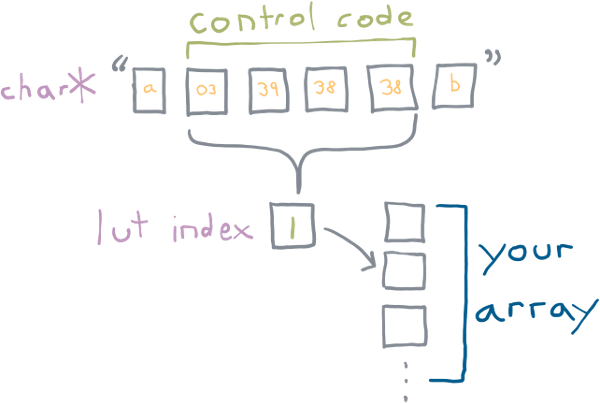

Printable Base89 LUT Encoding addresses this. You embed indices into a lookup table in your string. What is in the lookup table is up to you, the developer.

The following spec only describes how to embed the lookup table indices in a string, not what you could do with it. In practice it is useful for a few things:

- Semantic markup for theming

- Styled output that can be emitted to in-game text rendering, console or a browser, including font swapping, kinetic type styling and rendering transforms

- Denoting positional arguments for localization

It’s just an index into your lookup table. You decide.

Introduction

Printable Base89 LUT encoding is used to embed indices into UTF-8 encoded strings. The lookup table’s use is left up to the user.

Printable Base89 LUT encoded control codes have the following properties:

- The purpose of each index is ascribed by the developer, not the encoding designer

- Embeddable in UTF-8 encoded strings

- Viable for systems dev: All control codes display correctly in Visual Studio, gdb, and in terminals

- Viable for web dev: All control codes log correctly in browsers

- Trivially skippable: Constant 4-byte length, unlike ANSI/VT codes

- Specification includes error handling behaviours

- Compatible by design with C preprocessor concatenation

Control Code

A Printable Base89 LUT control code has the following byte sequence:

    ST C1 C2 C3
    03 xx xx xx
    ^  ^     ^
    |  |     |
    |  LSB   MSB
    |
    constant

xx is a value between [38, 126]. C1 through C3 contain values that combine to produce an index into a lookup table. C1 is the LSB, C3 is the MSB.

C1 through C3 are all base89 packed integers. They can have a value between 38 and 126 (inclusive), for a total of 89 possible values.

Why these values? The strings must be printable in any debugger, or dumped to a terminal, without being interpreted in a manner that makes review difficult or impossible. The values 38 through 126 are all normal, printable, single-byte UTF-8 characters. In fact, 126 is the last normal, printable character.

But why 38 for a starting value? 37 is the ordinal number of %, which would need to be escaped if inserted into a C format specifier string. With it avoided, we have a range of 89 possible values per byte.

The three values are combined, with C3 being the MSB, to form an index into the lookup table:

    index = (C3 - 38) * 89^2 + (C2 - 38) * 89 + (C1 - 38)

The maximum value is calculated as:

    max_index = (88 * 89^2) + (88 * 89) + 88 = 704,968

The base-10 byte pattern of the maximum value is:

    ST C1  C2  C3
    03 126 126 126

The base-10 byte pattern of the minimum value is:

    ST C1 C2 C3
    03 38 38 38

The following caveats exist in correct processing of these codes:

- Index 0 is reserved to indicate an error condition during unpacking. If a code is malformed, index 0 is returned. Make the 0th index in your LUT handle errors.

- Any byte value in C1-C3 outside of the range [38,126] results in an index of 0 (error condition). String processing continues after the sequence.

- If a Printable Base89 LUT code truncates (string does not have three bytes remaining after ST), the index returned is 0 (error condition). String processing terminates, but the part of the string before the truncated Base89 LUT code is still valid.

- The resulting index can be between 0 and 704968, but the lookup table will often be much smaller. If the index is out of range for a LUT, return index 0 (error condition). Continue string processing.

- There is no explicit support for escaping ST, e.g. having 0x03 0x03 in a string to produce a single 0x03 by a string processor. If verbatim 0x03 is needed in a string for any reason, use a LUT index to emit it.

- If control codes are not legal inside a part of a string (such as inside quotes), the string processor must handle this according to your quote processing needs.

- Encoding an out-of-range index is a runtime error to be handled appropriately. Do not silently encode 0.
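The index formula and the caveats above can be sketched in C as follows. This is a minimal illustration and not the API of any published implementation: the names `b89_decode`, `b89_encode` and the `B89_*` constants are assumptions made up for this example.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define B89_ST        0x03u    /* constant start byte */
#define B89_MIN       38u      /* lowest legal value for C1..C3 */
#define B89_MAX       126u     /* highest legal value for C1..C3 */
#define B89_BASE      89u
#define B89_MAX_INDEX 704968u  /* 88*89^2 + 88*89 + 88 */

/* Decode the code starting at s[0]. `remaining` counts the bytes left in
   the string, including ST. Returns the LUT index, or 0 (the reserved
   error index) if the code is truncated, malformed, or out of range for
   a table of lut_len entries. */
static uint32_t b89_decode(const unsigned char *s, size_t remaining,
                           uint32_t lut_len)
{
    assert(s != NULL);
    if (remaining < 4 || s[0] != B89_ST)
        return 0;

    uint32_t index = 0;
    for (int i = 3; i >= 1; i--) {          /* C3 is the MSB, C1 the LSB */
        if (s[i] < B89_MIN || s[i] > B89_MAX)
            return 0;
        index = index * B89_BASE + (s[i] - B89_MIN);
    }
    return index < lut_len ? index : 0;
}

/* Encode index into out[0..3]. Returns 1 on success. Returns 0 and leaves
   out untouched when index is out of range, so the caller has to handle
   the failure instead of silently getting an encoded 0. */
static int b89_encode(uint32_t index, unsigned char out[4])
{
    if (index > B89_MAX_INDEX)
        return 0;
    out[0] = (unsigned char)B89_ST;
    out[1] = (unsigned char)(B89_MIN + index % B89_BASE);
    out[2] = (unsigned char)(B89_MIN + (index / B89_BASE) % B89_BASE);
    out[3] = (unsigned char)(B89_MIN + index / (B89_BASE * B89_BASE));
    return 1;
}
```

With these definitions, encoding index 1 produces the bytes 03 39 38 38 in base 10, and encoding 704968 produces 03 126 126 126, matching the maximum byte pattern given above.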

Example Use For Semantic Markup

It is straightforward to define a Printable Base89 LUT code as a string in C and use it in a preprocessor concatenation:

    // note: \x27 is 0x27 which is 39 in base 10
    #define CODE_RESET "\x03\x27\x26\x26"
    #define CODE_NAME  "\x03\x28\x26\x26"
    #define NAME(x) CODE_NAME x CODE_RESET

    const char str[] = "Hello, " NAME("guy");

    static const char* ANSI_LUT[] = {
        // index 0 is always error
        "ERROR",

        // reset
        "\x1b[0m",

        // name - bold
        "\x1b[1m",
    };

    static const char* HTML_LUT[] = {
        // index 0 is always error
        "<ERROR>",

        // reset
        "</span>",

        // name
        "<span class='name'>",
    };

    output_ansi_string(str);
    output_html_string(str);

Admonishment: Security

Exotic use leads to security issues.

Having the ability to look up into a table and perform arbitrary processing while scanning strings will inevitably lead to exotic uses and therefore security abuses.

The functions that actually process strings rarely have the ability to determine whether the string has user input in it. In reality, every piece of code up the stack that manipulates the string needs to correctly anticipate what a LUT entry can do to the whole system state. Because a LUT gets added to during development, managing what processing a user can trigger, at the place in the code where the string is assembled, becomes intractable. Do not do unmanageably crazy things in your table logic.

FAQ

Why continue string processing after an error was encountered?

Efficient string processing routines intended for output emit pieces of a string to buffered streams as needed. Doing string processing of arbitrary length likely requires an allocation, and an additional buffer, in addition to what the buffered stream is doing.

A reasonably efficient Printable Base89 LUT string processor scans a string until an ST code is reached, emits the string up until that point, processes the code, and then continues to scan. This is preferred to copying the string into a buffer and emitting it all at once.
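The scan, emit, process, continue loop described above might look like the following sketch. Everything named here is an assumption for illustration: `sink_t` is a small fixed buffer standing in for a buffered stream, and `b89_process` is a hypothetical function name, not part of any published implementation. The table entries are plain strings, as in the semantic markup example.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* Stand-in for a buffered output stream (assumption for this sketch). */
typedef struct { char data[256]; size_t len; } sink_t;

static void sink_write(sink_t *s, const char *p, size_t n)
{
    size_t room = sizeof s->data - 1 - s->len;
    if (n > room)
        n = room;                       /* drop what does not fit */
    memcpy(s->data + s->len, p, n);
    s->len += n;
    s->data[s->len] = '\0';
}

/* Emit str to out, replacing each control code with its LUT entry.
   lut[0] is the error entry, as the spec requires. */
static void b89_process(const char *str, const char *const *lut,
                        size_t lut_len, sink_t *out)
{
    assert(lut_len > 0);
    const char *run = str;              /* start of pending plain text */
    const char *p = str;

    while (*p != '\0') {
        if ((unsigned char)*p != 0x03) { p++; continue; }

        sink_write(out, run, (size_t)(p - run));   /* text before ST */

        if (p[1] == '\0' || p[2] == '\0' || p[3] == '\0') {
            /* Truncated code: report the error and stop processing. */
            sink_write(out, lut[0], strlen(lut[0]));
            return;
        }

        size_t index = 0;
        for (int i = 3; i >= 1; i--) {  /* C3 is the MSB */
            unsigned char c = (unsigned char)p[i];
            if (c < 38 || c > 126) { index = lut_len; break; }
            index = index * 89 + (size_t)(c - 38);
        }
        if (index >= lut_len)
            index = 0;                  /* malformed or out of range */

        sink_write(out, lut[index], strlen(lut[index]));
        p += 4;                         /* codes are always four bytes */
        run = p;
    }
    sink_write(out, run, (size_t)(p - run));       /* trailing text */
}
```

Note that nothing is copied or allocated up front: each plain-text run is handed to the sink directly from the source string, and an error only substitutes the index 0 entry before scanning resumes.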

Is it possible to directly encode index 0?

LUT index 0 is reserved for the error case. You should not use it for a normal sequence. It is possible to encode index zero using the sequence 0x03 0x26 0x26 0x26 in your string, though there is no reason to do so.

Leaving index 0 encodable is preferred to adding one to every processed code, which just adds complexity.

What happens if untrusted input contains a control code?

All untrusted input needs to be scanned for the ST byte (0x03), and each occurrence must be validated or removed.

This is nothing new. ANSI escape sequences in untrusted user input can also affect output, and need to be validated or removed.
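As one way to take the "removed" route, the sketch below strips every ST byte from a mutable string before it is concatenated with trusted text. The function name `b89_strip_st` is an assumption for this example. Validation (for instance, allowing only a whitelist of indices) would be a separate policy decision.

```c
#include <assert.h>
#include <stddef.h>

/* Remove every ST (0x03) byte from buf in place.
   Returns the new string length. */
static size_t b89_strip_st(char *buf)
{
    assert(buf != NULL);
    size_t w = 0;
    for (size_t r = 0; buf[r] != '\0'; r++) {
        if ((unsigned char)buf[r] != 0x03)
            buf[w++] = buf[r];          /* keep everything except ST */
    }
    buf[w] = '\0';
    return w;
}
```

Only the start byte is dropped here. The three bytes that followed it are ordinary printable characters by design, so they remain in the output as visible text rather than being interpreted as an index.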

If I Implement This, Where Should I Link to the Spec?

This article’s slug is the permalink you are looking for.

Links To Implementations

- C single header file: ftg_base89.h

- Python module py-base89lut

Software Adoption Revolutions Come from Architectural Shifts, Not Performance Bumps

Tue 30 September 2025

Michael Labbe

#code

Choosing what to work on is one of the most interesting parts of building software. As developers, we often see tooling with suboptimal efficiency and aspire to rewrite it. In many cases, the performance gains can be substantial — but historically it has been user productivity gains that drive adoption in the large, not simply execution performance.

When looking at the history of software adoption revolutions, the productivity of the user was the dominating factor in making significant, sweeping changes to how we develop. Execution time, exclusive of other improvements, is commonly not sufficient to increase productivity enough to produce a large-scale software adoption revolution.

The more impactful thing we can do in designing software is to rethink the solution to the problem from first principles.

Key examples in the history of software that shaped current usage at large:

- Interactive time-sharing terminals replaced batch-processed punch cards, giving a 10x increase in the number of feedback cycles in a day. Faster punch card processing would not have competed with this shift.

- Software purchased and updated online: more stores and efficient disc storage could not win over the high convenience of automated bit synchronization afforded by platforms like Steam.

- Owning server racks versus on-demand cloud: In many cases, developers traded provisioning time and cost for execution time and cost. This enabled a generation of developers to explore a business problem space without making a capital-intensive commitment to purchase server hardware upfront.

- Virtualization: Isolated kernel driver testing, sandboxed bug reproduction, and hardware simulation greatly reduced the provisioning time necessary to reproduce system bugs. This underpins the explosion in kernel fuzzing and systems continuous integration (CI). Faster computers alone would not have delivered the same productivity multiplier.

- Live linked game assets: Artists get real-time feedback when they make geometry changes to assets in modelling tools. While optimized exporters and importers are still important, this alone could not result in the productivity multiplier that comes from real-time feedback.

- Declarative, immutable runtimes: Docker vastly reduced environment setup time. Consequently, this massively reduced guesswork as to whether a bug was isolated to a mutable execution environment. Highly optimized test environment provisioning would not have produced these productivity gains at the same scale.

Some of these productivity improvements, unfortunately, came with performance regressions. And yet, the software adoption revolutions occurred nonetheless.

Much of the software we use could benefit from being significantly faster. Reimplementing that software for better performance is like running farther in the same direction. Rearchitecting the software is like running in an entirely different direction. You should go as far as you can in the best direction.

We should write performant software because computing should be enjoyable, because slow software inefficiencies add up, and because high performance software is a competitive edge. The first step, however, is to consider how best to solve the problem. Performance improvements alone may not be deeply persuasive to large groups of users seeking productivity.

What is Grain DDL?

Fri 09 May 2025

Michael Labbe

#code

Every modern game is powered by structured data: characters, abilities, items, systems, and live events. But in most studios, this data is fractured—spread across engine code, backend services, databases, and tools, with no canonical definition. Your investment in long-lived game data is tied to code that rots.

Grain DDL fixes that. Define your data once and generate exactly what you need across all systems. No drift. No duplication. No boilerplate. Just one source of truth.

Game data is what gives your game its trademark identity: its feel, the subtlety of balance, inventory interactions, motion, lighting and timing. Game data is where your creative investment lives, and you need to plan for it to outlive the code.

Grain DDL is a new data definition language — a central place to define and manage your game’s data models. Protect your investment in your game data by representing it in a central format that you process, annotate, and control.

Typically, games represent data by conflating a number of things:

- What types does the data use?

- How and where are those types encoded in memory?

- How do those types constrain potential values?

Game data has a lifecycle: represented by a database, sent across a network wire, manipulated by a schema-specific UI and serialized to disk. In each step, a developer binds their data schema to their implementation.

- The types can be converted to and from database types

- The data might be serialized and deserialized from JSON in a web service language like Go

- The data needs to be securely validated and read from the wire into a C++ data structure

- The types likely need a UI implementation for author manipulation

In each of these steps, there is imperative code that binds the schema to an implementation. More importantly, there is no longer a canonical representation of a central data model. There are only partial representations of it strewn across subsystems, usually maintained by separate members of a game team.

Grain DDL protects your data investment by hoisting the data and its schema outside of implementation-specific code. You write your code and data in Grain DDL, and then generate exactly the representation you need.

You Determine What Is Generated

Grain DDL comes in two parts: a data definition language, and Code Generation Templates. Consider this simple Grain definition:

    struct Person {
        str name,
        u16 age,
    }

From there, you can generate a C struct with the same name using code generation templates:

    /* for each struct */
    range .structs
        `typedef struct {`.

        /* for each struct field */
        range .fields
            tab(1) c_typename(.type) ` ` .name `;`.
        end

        `} ` camel(.name) `_t;`;
    end

You get:

    typedef struct {
        char *name;
        uint16_t age;
    } person_t;

Code Generation Templates are a new templating language designed to make it ergonomic to generate source code in C++-like languages. Included with Grain DDL, they are the standard way to maintain code generation backends. Code Generation Templates contain a type system, a standard library of functions, a const expression evaluator and the ability to define your own functions inside of the templates.

However, if Code Generation Templates do not suit your needs, Grain DDL offers a C API, enabling you to walk your data models and types to perform any analysis, presorting or code generation you require. All of this happens at compile time, preventing the need for runtime complexity.

Having a specialized code generation templating system makes code generation maintainable. This is crucial to retaining the investment in your data.

Native Code First

Grain DDL’s types are largely isomorphic to C plain old data types. Grain is designed to produce data that ends up in a typed game programming language like C, C++, Rust, or C#. Philosophically, Grain DDL is much closer to bit and byte manipulation environments than it is a high-level web technology with dictionary-like objects and ill-defined Number types.

Even so, you can use Grain DDL to convert your game data to and from JSON and write serializers for web tech where necessary. It can also be used to specify RESTful interfaces and their models, bypassing a need to rely on OpenAPI tooling.

Crucially, Grain DDL never compromises on the precision required to specify data that runs inside a game engine.

Data Inheritance

Grain DDL lets you define base data structures, and then derive from them, inheriting defaults where derived fields are not specified. Consider:

    blueprint struct BaseArmor {
        f32 Fire = 1.0,
        f32 Ice = 1.0,
        f32 Crush = 1.0,
    }

    struct WoodArmor copies BaseArmor {
        f32 Fire = BaseArmor * 1.5
    }

In this case, WoodArmor inherits Ice and Crush at 1.0, but Fire has a +50% increase. This flexible spreadsheet-like approach to building out combat tables for a game gives you a ripple effect on data that immediately permeates all codebases in a project.

Unlimited Custom Field Attributes

Grain DDL is the central definition for your types and data. In some locations, you need data in addition to its name and type to fully specify your type. Consider how health can be limited to a range smaller than the type specifies:

    struct player {
        i8 health = 100 [range min = 0, max = 125]
    }

Attributes in Grain DDL are a way of expressing additional data about a field, queryable during code generation. It is also possible to define a new, strongly typed attribute that represents your bespoke needs:

    attr UISlider {
        f32 min = -100.0,
        f32 max = +100.0,
        f32 step = 1.0,
    }

    struct damage {
        f32 amount [UISlider min = 0.0]
    }

This defines a new attribute UISlider which hints that any UI that manipulates damage.amount should use a slider, setting these parameters. Using data inheritance (described above), the slider’s max and step do not change from their defaults, but the minimum is raised to 0.0.
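To make "queryable during code generation" concrete, here is what a backend could plausibly emit in C for the range attribute on player.health shown earlier. This is a hand-written illustration only, not actual Grain DDL output: the macro and function names (`PLAYER_HEALTH_MIN`, `player_health_is_valid`, `player_health_clamp`) are hypothetical.

```c
#include <assert.h>
#include <stdint.h>

/* Values taken from: i8 health = 100 [range min = 0, max = 125] */
#define PLAYER_HEALTH_DEFAULT 100
#define PLAYER_HEALTH_MIN     0
#define PLAYER_HEALTH_MAX     125

/* The declared range must fit inside the field's storage type (i8). */
static_assert(PLAYER_HEALTH_MIN >= INT8_MIN && PLAYER_HEALTH_MAX <= INT8_MAX,
              "health range must fit in int8_t");

/* Returns nonzero when health lies inside the declared range. */
static int player_health_is_valid(int8_t health)
{
    return health >= PLAYER_HEALTH_MIN && health <= PLAYER_HEALTH_MAX;
}

/* Clamp an arbitrary integer into the declared range. */
static int8_t player_health_clamp(int health)
{
    if (health < PLAYER_HEALTH_MIN) return PLAYER_HEALTH_MIN;
    if (health > PLAYER_HEALTH_MAX) return PLAYER_HEALTH_MAX;
    return (int8_t)health;
}
```

The point of the sketch is that the limits live in one definition and the checks are derived from it, so a value such as 126, which the i8 type allows, can still be rejected wherever the data is read.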

Zero Runtime Overhead

Grain DDL is intended to run as a precompilation step on the developer’s machine, inserting code that is compiled in the target languages of your choice, and with the compiler of your choice. There is no need to link it into shipping executables like a config parsing library. Remove your dependence on runtime reflection.

Grain DDL runs quickly and can generate hundreds of thousands of lines of code in under a second. The software is simply a standalone executable that is under a megabyte. It is fully supported on Windows, macOS and Linux.

Game developers do not like to slow their compiles down or impose unnecessarily heavy dependencies on developers. Grain DDL is designed to be as lightweight as possible. In practice, Grain replaces multiple code generators, simplifying building.

Salvage Your Game Data

This article only begins to cover the full featureset of Grain DDL.

As game projects scale, the same data gets defined, processed, and duplicated across a growing number of systems. But game data is more than runtime glue — game data is a long-term asset that outlives game code. It powers ports, benefits from analysis, and extends a title’s shelf life. Grain DDL puts that data under your control, in one place, with one definition — so you can protect and maximize your investment.

Grain DDL is under active development. Email grain@frogtoss.com to get early access, explore example projects, and add it to your pipeline.
